Updating containerized applications manually isn't a job for the meek. It means stopping the old version, starting the new one, waiting, checking that it launched successfully, and rolling back to the previous version if something goes wrong. All of this takes serious effort and can slow down the release process.

If you want to reduce product release time, create fault-tolerant deployments without downtime, update applications and features more often, or work with more agility, a Kubernetes deployment will help you.

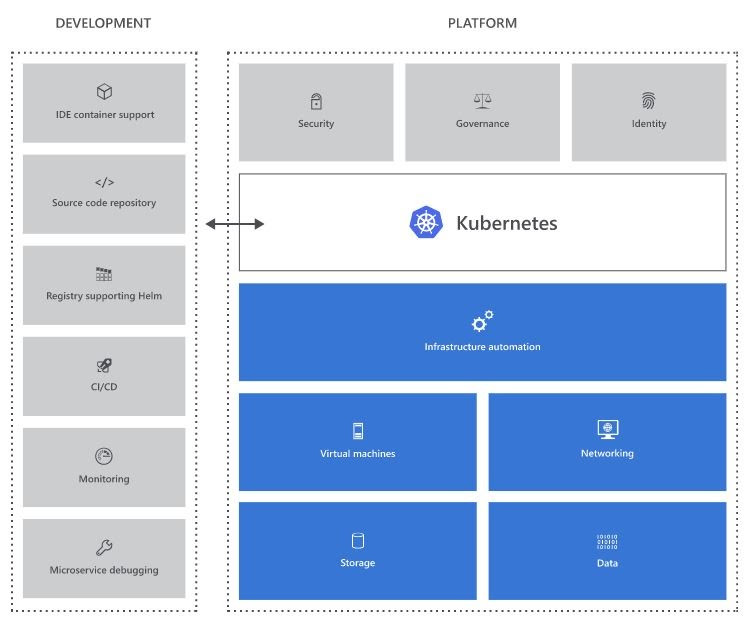

Image Source: azurecomcdn.azureedge.net

What Is Kubernetes and Why Is It So Popular Among IT Teams?

This young platform was introduced by Google in 2014 and has already become an industry benchmark for container orchestration. It is now supported by the Cloud Native Computing Foundation and keeps evolving thanks to a large community of members and partners.

The more enterprises switch to microservice and cloud-based architectures with containerization, the greater the demand for a reliable and proven platform. Why use Kubernetes?

Kubernetes has the following advantages:

1. Kubernetes is fast. You get a self-service platform as a service (PaaS), and you can build a hardware abstraction layer for developers on top of it. Development teams can quickly and easily request and receive both the resources they need and additional ones (for example, to handle extra load), since all resources come from shared infrastructure. In addition, you can use tools designed specifically for Kubernetes to automate packaging, deployment, and testing.

2. Kubernetes is economical. Containers use resources far more efficiently than virtual machines (VMs) running on hypervisors: they are lightweight and therefore require less CPU and memory.

3. Kubernetes is independent of specific cloud platforms. It runs not only on AWS (EKS), Microsoft Azure (AKS), or Google Cloud (GKE) but also on-premises, and cloud service providers can take over the management of Kubernetes for you. When transferring workloads, you don’t have to re-engineer the apps or completely redraw the infrastructure, which allows you to standardize work on one platform and avoid being tied to a specific vendor.

This will allow you to:

- Build sophisticated container-based apps and run them anywhere.

- Move containerized applications from local development machines to the cloud using the same tools.

- Run scalable clusters on AWS without losing compatibility with on-premises deployments.

- Develop and maintain Kubernetes-compatible software to optimize and expand app architecture.

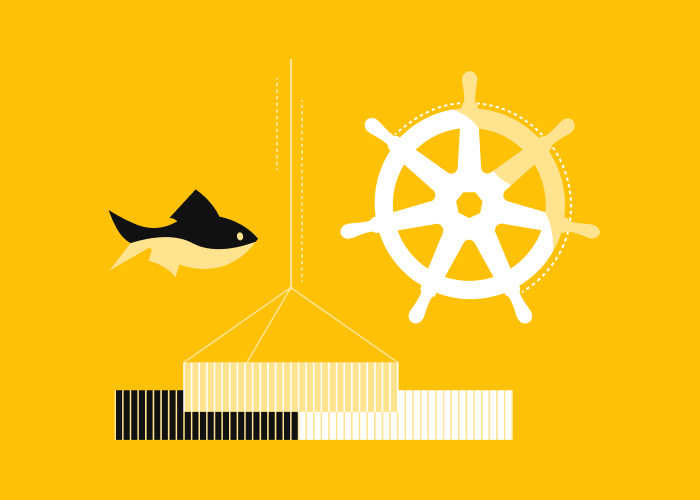

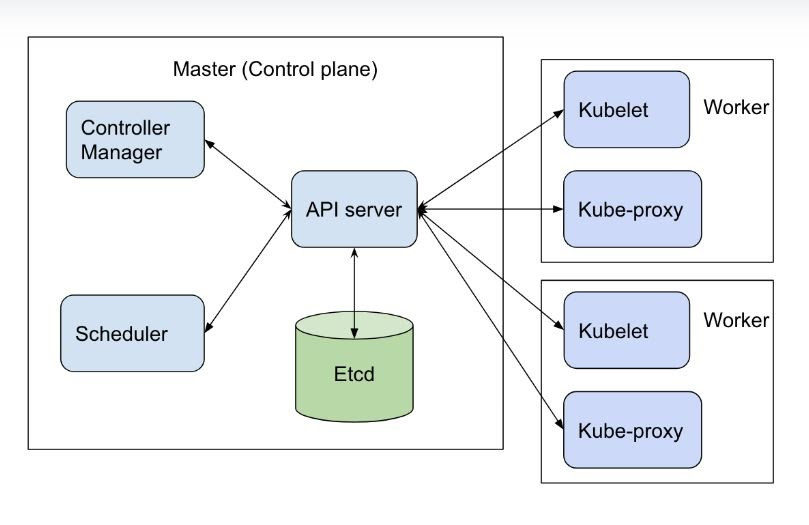

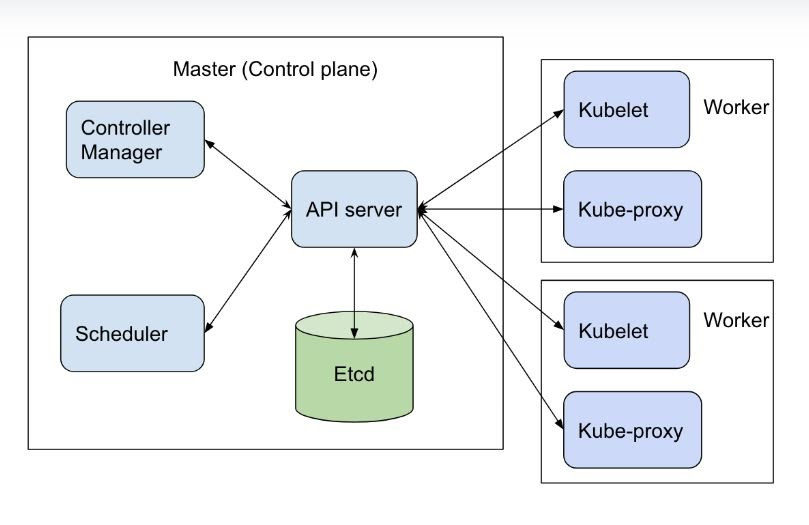

Kubernetes Architecture

The system follows a client-server architecture: the control plane (master) nodes act as the server side of Kubernetes, while the worker nodes connect to the control plane and act as clients.

Image Source: rancher.com

What Is a Kubernetes Deployment?

Basically, a Kubernetes deployment provides declarative updates for apps. It describes the life cycle of the app: for example, which images to use, how many pods there should be, and how to update them.

The primary benefit of a deployment is ensuring that the right number of pods is running and available at all times. Each update is recorded and versioned, so a rollout can be paused, resumed, or rolled back to a previous version.
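To make this concrete, here is a minimal sketch of what such a declarative description could look like, built as a plain Python dictionary and printed as JSON (a format `kubectl apply -f` accepts alongside YAML). The app name `web`, the image `nginx:1.25`, and the helper `build_deployment` are placeholders invented for illustration.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}  # hypothetical label used to tie the pieces together
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,                 # how many copies to keep running
            "selector": {"matchLabels": labels},  # which pods this Deployment owns
            "strategy": {                         # how to update them
                "type": "RollingUpdate",
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # which image to use
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


# Placeholder values, chosen only for the example.
manifest = build_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it would ask the cluster to keep three copies of the image running; changing `replicas` or `image` and re-applying is what triggers a new, versioned rollout.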

How Kubernetes works

The Kubernetes deployment object allows you to:

- Roll out a Pod or ReplicaSet;

- Update Pods and ReplicaSets;

- Scale the deployment;

- Pause or resume a rollout;

- Roll back to previous versions.

Kubernetes in action

A deployment is an object, usually written in YAML, that defines the pods and the number of instances of each pod, the so-called replicas. You specify the required number of replicas to run in the cluster through the ReplicaSet, which is part of the deployment object. If a node fails, the ReplicaSet recreates the lost pods on another available node.
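The idea of keeping a fixed number of replicas alive can be sketched as a small reconciliation function. This is a toy model, not how Kubernetes is actually implemented: the pod names, node names, and the "least loaded node" placement rule are all assumptions made for the example.

```python
def reconcile(desired: int, pods: dict[str, str], healthy_nodes: list[str]) -> dict[str, str]:
    """Return a pod -> node mapping with `desired` pods, all on healthy nodes."""
    if not healthy_nodes:
        return {}  # nowhere to run anything
    # Pods on failed nodes are lost; keep the rest (at most `desired` of them).
    running = {pod: node for pod, node in pods.items() if node in healthy_nodes}
    running = dict(sorted(running.items())[:desired])
    # Count pods per healthy node so replacements go to the least loaded one.
    load = {node: 0 for node in healthy_nodes}
    for node in running.values():
        load[node] += 1
    next_id = 0
    while len(running) < desired:
        name = f"web-{next_id}"  # hypothetical naming scheme
        next_id += 1
        if name in pods:
            continue  # never reuse the name of an existing or lost pod
        target = min(healthy_nodes, key=load.__getitem__)
        running[name] = target
        load[target] += 1
    return running


# Three replicas, then node-b fails and only node-a and node-c remain.
before = {"web-0": "node-a", "web-1": "node-b", "web-2": "node-b"}
after = reconcile(3, before, healthy_nodes=["node-a", "node-c"])
print(after)
```

The surviving pod stays where it is, and two replacements are created on the remaining nodes so that the replica count returns to three.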

A DaemonSet starts and runs specific background processes (in a pod) on the nodes you designate. DaemonSets are most often used for maintenance and support tasks. For example, New Relic Infrastructure uses a DaemonSet to deploy its infrastructure agent to every node in the cluster.
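As a rough illustration of "one background pod per designated node", the sketch below picks the nodes whose labels match a selector. It is a simplification under stated assumptions: the node names and the `disk` label are invented, and real scheduling also considers factors such as taints that are ignored here.

```python
def daemonset_placement(nodes: dict[str, dict[str, str]], node_selector: dict[str, str]) -> list[str]:
    """Return the nodes that should each run one copy of the daemon pod."""
    return sorted(
        name
        for name, labels in nodes.items()
        # A node qualifies when it carries every key/value pair in the selector.
        if all(labels.get(key) == value for key, value in node_selector.items())
    )


# Hypothetical cluster: node names and labels are placeholders.
cluster = {
    "node-a": {"disk": "ssd"},
    "node-b": {"disk": "hdd"},
    "node-c": {"disk": "ssd"},
}
ssd_only = daemonset_placement(cluster, {"disk": "ssd"})
everywhere = daemonset_placement(cluster, {})  # empty selector: every node
print(ssd_only, everywhere)
```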

Basic deployment strategies of Kubernetes

- Blue-green deployment;

- Recreate;

- Rolling update;

- Canary;

- Dark (hidden) or A/B;

- Flagger and A/B.
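To give a feel for one of these strategies, here is a small simulation of a rolling update. It is a sketch under simplifying assumptions: new pods are treated as ready the moment they start, and the two limits are plain integers named after the `maxSurge` and `maxUnavailable` settings of a Deployment (Kubernetes also accepts percentages, which are not modeled here).

```python
from typing import Iterator


def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0) -> Iterator[tuple[int, int]]:
    """Yield (old_pods, new_pods) after each step of a simulated rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be zero")
    old, new = replicas, 0
    while old > 0 or new < replicas:
        # Scale up: start new pods without exceeding replicas + max_surge in total.
        room = replicas + max_surge - (old + new)
        new += min(room, replicas - new)
        # Scale down: stop old pods while keeping at least
        # replicas - max_unavailable pods running.
        spare = (old + new) - (replicas - max_unavailable)
        old -= min(old, spare)
        yield old, new


steps = list(rolling_update(3))
print(steps)  # [(2, 1), (1, 2), (0, 3)]
```

With the defaults used here, one extra pod is started before an old one is stopped, so the app never drops below its three replicas. The Recreate strategy, by contrast, stops all old pods before starting any new ones, which is simpler but implies downtime.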

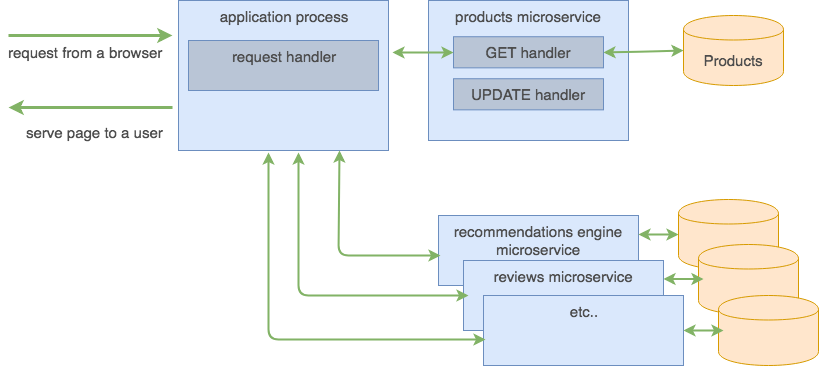

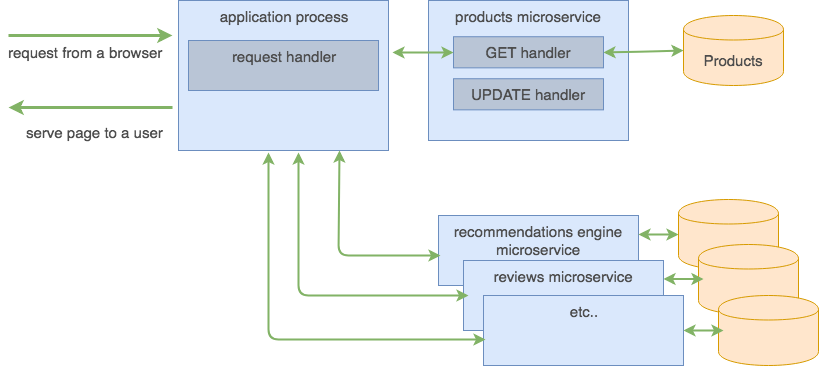

Kubernetes microservices in theory

Previously, developers created monolithic web apps: huge codebases overgrown with functions until they turned into slow, hard-to-manage engines. Over time, however, they came to realize that microservices are more effective than a big monolith.

The introduction of a microservice-based architecture means dividing the monolith into at least two apps: frontend and backend (API).

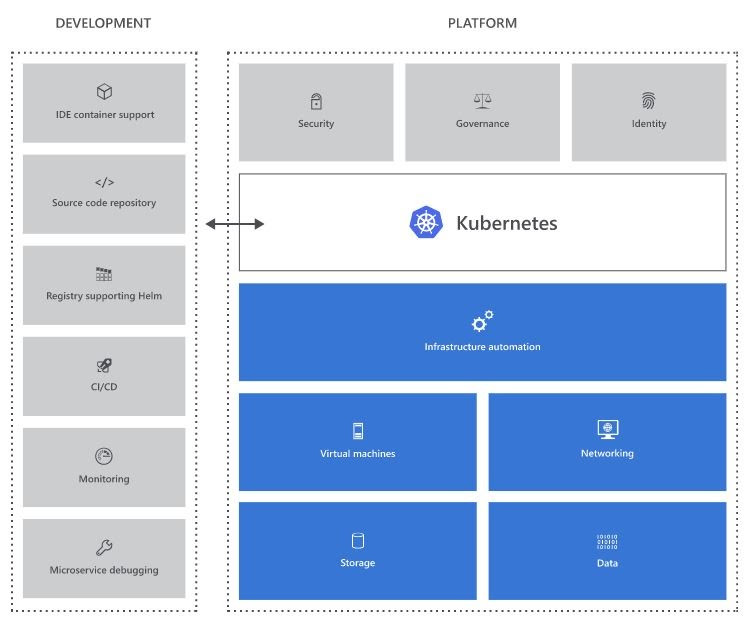

After you decide to use microservices, the question arises: which environment is suitable for running them? What will make the service resilient and convenient to manage? Let’s take a look at Docker.

Docker is great for organizing the development cycle. It lets developers run apps and services in local containers, which can then be plugged into a continuous integration and deployment workflow.

But how do you coordinate and schedule numerous containers? How do you make them interact with each other? How do you scale multiple container instances? That's where Kubernetes comes in handy.

Image Source: gravitational.com

Docker and Kubernetes: working together the right way

These technologies are different, so it’s not entirely fair to compare them or to talk about which of them should be given priority.

Docker is a container platform, and Kubernetes is an orchestration tool for container platforms like Docker. Combined, these technologies let you successfully develop, ship, and scale containerized apps.

Use Kubernetes and Docker to:

1. Ensure the reliability of the infrastructure and the availability of the application. It will remain online even if a few nodes go offline.

2. Scale the app. When the load on an application increases, it needs to be scaled. Just launch more containers or add more nodes to your Kubernetes cluster.

Kubernetes microservices: restructuring for freedom and autonomy

Software architects have long tried to separate monolithic apps into reusable components. A microservice architecture provides:

- Simpler automated testing;

- Fast and responsive deployment models;

- Higher stability.

Another win for microservices is the ability to choose the best tool for the job. Some parts of the app may benefit from the speed of C++, others from higher-level languages such as Python or JavaScript. In our experience, a well-designed Kubernetes implementation allocates computing resources efficiently, so organizations don’t pay for what they don’t use.

Why Kubernetes? The convenience of the Kubernetes monitoring system

Kubernetes provides detailed data about an app's resource usage at every level: per cluster, per namespace, and per pod. It records general time-series metrics about containers in a central database and provides a user interface for viewing this data.

An important aspect of Kubernetes monitoring is the ability to see how objects in the cluster interact, using Kubernetes’ built-in labeling system.
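As a simple illustration of why labels matter for monitoring, the sketch below sums CPU usage per label value. The pod records, the `cpu_millicores` field, and the helper `usage_by_label` are all made up for the example; a real setup would read these numbers from the cluster's metrics pipeline.

```python
from collections import defaultdict


def usage_by_label(pods: list[dict], key: str) -> dict[str, int]:
    """Sum CPU usage (in millicores) per value of the given label key."""
    totals: dict[str, int] = defaultdict(int)
    for pod in pods:
        # Pods without the label are grouped under a placeholder bucket.
        value = pod["labels"].get(key, "<unlabeled>")
        totals[value] += pod["cpu_millicores"]
    return dict(totals)


# Hypothetical sample data.
sample_pods = [
    {"name": "api-1", "labels": {"app": "api", "tier": "backend"}, "cpu_millicores": 250},
    {"name": "api-2", "labels": {"app": "api", "tier": "backend"}, "cpu_millicores": 300},
    {"name": "ui-1", "labels": {"app": "ui", "tier": "frontend"}, "cpu_millicores": 100},
    {"name": "job-1", "labels": {}, "cpu_millicores": 50},
]
per_app = usage_by_label(sample_pods, "app")
per_tier = usage_by_label(sample_pods, "tier")
print(per_app, per_tier)
```

The same data can be sliced by any label (`app`, `tier`, team, environment), which is what makes consistent labeling so useful for dashboards and alerts.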

Three Kubernetes autoscaling tools

Kubernetes offers three scalability tools. Two of them, the horizontal and the vertical autoscaler, operate at the application abstraction level. The third, the cluster autoscaler, operates at the infrastructure level.

Auto-scaling is an excellent tool that ensures the flexibility and scalability of the underlying cluster infrastructure, as well as the ability to meet the changing demands of the workloads running on top of it.
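For the horizontal autoscaler, the core calculation documented by Kubernetes is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with scaling skipped when the ratio is close enough to 1. The sketch below reproduces just that formula; the function name and the sample numbers are illustrative, and the real controller adds further rules (readiness handling, stabilization windows, min/max bounds) that are left out.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Apply the basic horizontal autoscaling formula to one metric."""
    ratio = current_metric / target_metric
    # Within the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods averaging 90% CPU against a 60% target: scale out.
scale_out = desired_replicas(4, 90, 60)
# 3 pods averaging 50% CPU against a 100% target: scale in.
scale_in = desired_replicas(3, 50, 100)
# 5 pods averaging 63% against a 60% target: within tolerance, no change.
no_change = desired_replicas(5, 63, 60)
print(scale_out, scale_in, no_change)  # 6 2 5
```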

Discovering Azure Kubernetes Service

Kubernetes on Azure provides a serverless Kubernetes platform with built-in continuous integration and delivery capabilities. Azure takes care of security and management, and teams collaborate on a single platform to work with apps quickly and confidently.

The Azure service has options for every purpose, whether it is moving .NET apps to Windows Server containers, modernizing Java apps in Linux containers, or running microservices apps in the public cloud, at the edge, or in a hybrid environment.

A Kubernetes cluster on Azure is created through the Azure portal or with command-line tools.

Kubernetes on AWS: easier management

Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service that enables Kubernetes to run on AWS. A big advantage of EKS and other similar Kubernetes hosting services is that they remove the operational burden of running the control plane. This simplifies the Kubernetes implementation and allows you to focus on app development.

How to Implement Kubernetes?

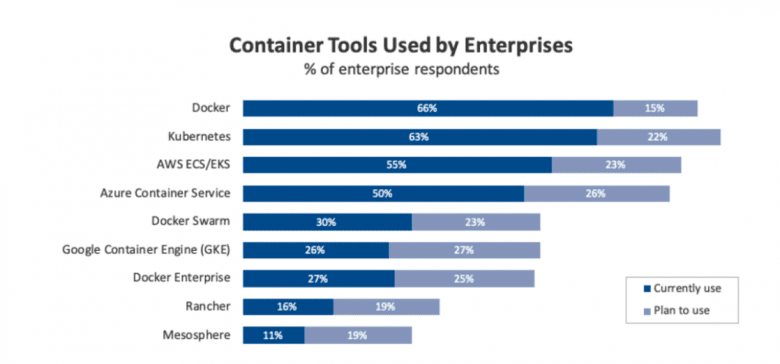

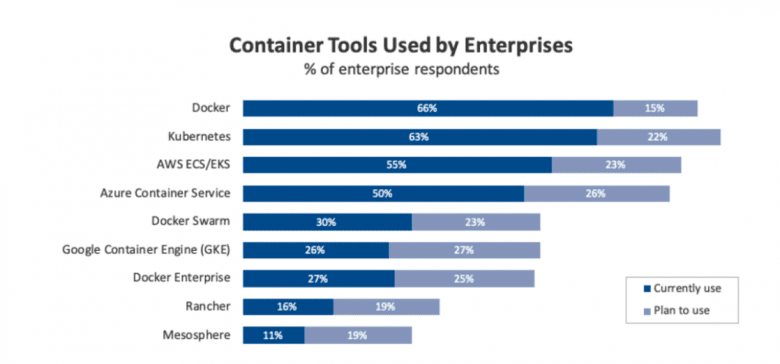

According to Flexera research, over 80% of respondents plan to use Kubernetes and Docker platforms. But 80% of organizations are unable to find container specialists. The COVID-19 crisis will accelerate the adoption of cloud and related services. The container software market is estimated to grow by 33 percent per year. When it comes to adopting containers or redesigning existing systems, organizations that think strategically will gain a competitive advantage.

Image Source: resources.flexera.com

Kubernetes implementation plan

Kubernetes has evolved significantly over the five years of its existence, but it has not become easier. The rapid pace of updates creates difficulties, especially when users do not keep up with the development of the central Kubernetes codebase. Just a couple of years ago, IT specialists mastered containerization, and now it’s time to understand container orchestration.

Enterprise giants that wish to switch to Kubernetes search for skilled people who can write code, know how to manage operations, and understand the architecture of applications, repositories, and data-handling procedures.

Kubernetes: up and running with us

Would you like to plan and develop a complete, mostly automated local deployment system from scratch? Do you need optimization and a technical audit? We will take over the management of the Kubernetes/Docker platform so you can focus on more important things: delivering apps to the users.

Read more in our blog Selecting and setting up Kubernetes cloud services in your IT environment.

Conclusion

By using Kubernetes, your system will become more resilient and easier to manage. With a Kubernetes container implementation, you will make the most of your computing resources and save money. We have been working with DevOps for a long time and have already carried out step-by-step Kubernetes implementations many times.

Interested in Kubernetes deployment but don’t know where to start? Contact us to discuss your project.